Data

Overview

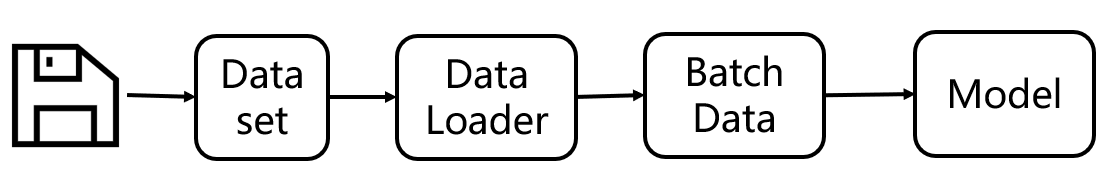

`Dataset` stores the samples and their corresponding labels. `DataLoader` wraps an iterable around the `Dataset` to enable easy access to the samples, and handles batching, shuffling and multiprocess data loading.
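As a minimal sketch of the two roles, the snippet below wraps two in-memory tensors in `TensorDataset` (a ready-made `Dataset` in `torch.utils.data`) and lets a `DataLoader` iterate over them in shuffled mini-batches. The tensor shapes and the names `features` and `labels` are illustrative assumptions.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Illustrative data: 10 samples with 3 features each, plus an integer label per sample.
features = torch.arange(30, dtype=torch.float32).reshape(10, 3)
labels = torch.arange(10) % 2

# The Dataset stores samples and labels; dataset[i] returns (features[i], labels[i]).
dataset = TensorDataset(features, labels)

# The DataLoader iterates over the Dataset in shuffled mini-batches of 4 samples.
loader = DataLoader(dataset, batch_size=4, shuffle=True)

batch_shapes = []
for x, y in loader:
    # x has shape (batch, 3), y has shape (batch,); the last batch holds the 2 leftovers.
    batch_shapes.append((tuple(x.shape), tuple(y.shape)))

print(len(dataset), batch_shapes)
```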

Dataset

A custom dataset subclasses `Dataset` and should implement three magic methods:

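```python
from torch.utils.data import Dataset


class MyDataset(Dataset):
    def __init__(self, samples, labels):
        """Store the samples and their labels (here: any indexable sequences)."""
        self.samples = samples
        self.labels = labels

    def __len__(self):
        """Return the size of dataset when called by len(dataset)."""
        return len(self.samples)

    def __getitem__(self, idx):
        """Return the idx-th sample when called by dataset[idx]."""
        return self.samples[idx], self.labels[idx]
```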
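To show when each method is called, here is a hypothetical `SquaresDataset` that computes every sample on demand instead of storing it. Feeding it to a `DataLoader` also shows the default collate function stacking the returned `(x, x**2)` tuples into two batch tensors. The class name and the sample formula are illustrative assumptions.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class SquaresDataset(Dataset):
    """Hypothetical map-style dataset: sample i is the pair (i, i**2), computed on demand."""

    def __init__(self, n):
        self.n = n  # number of samples

    def __len__(self):
        # Called by len(dataset) and by the default samplers to know the index range.
        return self.n

    def __getitem__(self, idx):
        # Called as dataset[idx]; reject out-of-range indices like a sequence would.
        if not 0 <= idx < self.n:
            raise IndexError(idx)
        x = torch.tensor(float(idx))
        return x, x ** 2


squares = SquaresDataset(5)
# The default collate_fn stacks the first elements and the second elements separately.
xs, ys = next(iter(DataLoader(squares, batch_size=5)))
print(len(squares), squares[3], xs, ys)
```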

DataLoader

`torch.utils.data.DataLoader` supports:

- map-style and iterable-style datasets

- customizing data loading order

- automatic batching

- single- and multi-process data loading

- automatic memory pinning

Dataset types

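- Map-style datasets implement `__getitem__` and `__len__` and represent a map from (possibly non-integral) indices/keys to data samples; `dataset[idx]` returns the sample for `idx`.

- Iterable-style datasets subclass `IterableDataset` and implement `__iter__`; they represent an iterable over data samples, which suits streams where random reads are expensive or impossible. `__len__` is optional.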
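A minimal iterable-style sketch, assuming a hypothetical `CountingStream` class that streams consecutive integers. Only `__iter__` is implemented, and the `DataLoader` simply groups whatever the iterator yields into batches.

```python
import torch
from torch.utils.data import DataLoader, IterableDataset


class CountingStream(IterableDataset):
    """Hypothetical iterable-style dataset: yields the integers in [start, end) as a stream."""

    def __init__(self, start, end):
        self.start = start
        self.end = end

    def __iter__(self):
        # Samples come out in the order this generator yields them;
        # there is no index, so no __getitem__ is needed.
        for value in range(self.start, self.end):
            yield torch.tensor(value)


stream = CountingStream(0, 7)
# With batch_size=3 the DataLoader groups consecutive samples from the stream.
batches = [batch.tolist() for batch in DataLoader(stream, batch_size=3)]
print(batches)  # [[0, 1, 2], [3, 4, 5], [6]]
```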

Data loading order

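- For map-style datasets, a sampler decides the order of the indices passed to `dataset[idx]`: `shuffle=True` uses a random permutation, `shuffle=False` a sequential order, and a custom `sampler` can be passed instead.

- For iterable-style datasets, the data loading order is entirely controlled by the user-defined iterable.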
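For map-style datasets the order is easiest to see by listing a sampler directly. This sketch uses the built-in `SequentialSampler` and `RandomSampler`; the seeded `torch.Generator` is only there to make the shuffled order reproducible, and the dataset contents are an illustrative assumption.

```python
import torch
from torch.utils.data import DataLoader, RandomSampler, SequentialSampler, TensorDataset

# Each sample is simply its own index, so loaded values reveal the loading order.
dataset = TensorDataset(torch.arange(6))

# A sampler yields the indices that the DataLoader passes to dataset[idx].
sequential_order = list(SequentialSampler(dataset))
shuffled_order = list(RandomSampler(dataset, generator=torch.Generator().manual_seed(0)))

# shuffle=True builds a RandomSampler internally; passing sampler= instead gives full control.
# (sampler and shuffle=True are mutually exclusive.)
loader = DataLoader(
    dataset,
    batch_size=2,
    sampler=RandomSampler(dataset, generator=torch.Generator().manual_seed(0)),
)
loaded = [x.tolist() for (x,) in loader]

print(sequential_order, shuffled_order, loaded)
```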

Loading batched and non-batched data

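DataLoader supports automatically collating individually fetched data samples into batches via the following arguments:

- batch_size: how many samples per batch to load (default: 1). Setting `batch_size=None` (with no `batch_sampler`) disables automatic batching, so each sample is returned on its own.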

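- drop_last: if `True`, drop the last incomplete batch when the dataset size is not divisible by batch_size (default: `False`).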

- batch_sampler: iterable, a sampler that returns mini-batches of indices. batch_sampler is mutually exclusive with batch_size, shuffle, sampler, and drop_last.

- collate_fn: callable that merges a list of samples to form a mini-batch of Tensor(s). It is applied when loading batches from a map-style dataset.
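The batching arguments above can be compared side by side by counting samples per batch. In this sketch the 10-sample dataset and the hand-written list of index batches passed as `batch_sampler` are illustrative assumptions; any iterable of index lists is accepted there.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

dataset = TensorDataset(torch.arange(10))


def batch_sizes(loader):
    """Return the number of samples in each batch the loader yields."""
    return [len(x) for (x,) in loader]


# batch_size=4: the last batch holds the 2 leftover samples.
keep_last = batch_sizes(DataLoader(dataset, batch_size=4))
# drop_last=True discards that incomplete final batch.
dropped = batch_sizes(DataLoader(dataset, batch_size=4, drop_last=True))

# batch_sampler replaces batch_size/shuffle/sampler/drop_last and yields index lists directly.
from_batch_sampler = batch_sizes(
    DataLoader(dataset, batch_sampler=[[0, 1, 2], [3, 4], [5, 6, 7, 8, 9]])
)

# batch_size=None disables automatic batching: each item is one sample, not a stacked batch.
first_unbatched = next(iter(DataLoader(dataset, batch_size=None)))

print(keep_last, dropped, from_batch_sampler, first_unbatched)
```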

Single- and Multi-process Data Loading

- num_workers: how many subprocesses to use for data loading. 0 means that the data will be loaded in the main process. A common starting point is the number of available CPU cores, tuned empirically for the workload.
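With `num_workers > 0`, each worker process receives its own copy of the dataset. For a map-style dataset that is harmless because the sampler hands out distinct indices, but an iterable-style dataset would yield every sample once per worker. The sketch below follows the sharding pattern from the `IterableDataset` documentation, using `get_worker_info()` to give each worker a disjoint slice. `ShardedRange` is a hypothetical name, and `os.cpu_count()` is only a starting point for `num_workers`.

```python
import math
import os

import torch
from torch.utils.data import DataLoader, IterableDataset, get_worker_info


class ShardedRange(IterableDataset):
    """Hypothetical iterable dataset over [start, end) that splits the range across workers."""

    def __init__(self, start, end):
        self.start = start
        self.end = end

    def __iter__(self):
        info = get_worker_info()
        if info is None:
            # num_workers=0: the main process iterates over the whole range.
            lo, hi = self.start, self.end
        else:
            # Each worker holds its own dataset copy, so give each one a disjoint slice.
            per_worker = math.ceil((self.end - self.start) / info.num_workers)
            lo = self.start + info.id * per_worker
            hi = min(lo + per_worker, self.end)
        for value in range(lo, hi):
            yield torch.tensor(value)


# A heuristic starting point for num_workers; measure throughput before settling on it.
suggested_workers = os.cpu_count() or 0

single_process = [x.item() for x in DataLoader(ShardedRange(0, 8), batch_size=None)]
print(suggested_workers, single_process)
```

Only the single-process path is exercised here. Passing `num_workers=2` would make each worker yield half of the range; on platforms that start workers with `spawn` (such as Windows and macOS), that call belongs under an `if __name__ == "__main__":` guard.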

Pinning memory

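Pinning memory (`pin_memory=True`) copies batches into page-locked host memory, which makes host-to-GPU transfers faster and lets them run asynchronously with `.to(device, non_blocking=True)`. Pinned memory cannot be paged out, though, so pinning a lot of data increases memory pressure on the host and can slow the whole system down. Enable it when training on a GPU, and measure whether it actually improves throughput for your application.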
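A sketch of the usual pairing of `pin_memory` with non-blocking copies, assuming the batches are destined for a CUDA device when one is available. On a CPU-only machine pinning is simply turned off, so the same code runs everywhere; the random dataset is an illustrative assumption.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
dataset = TensorDataset(torch.randn(8, 3), torch.arange(8))

# Pin only when batches will be copied to a CUDA device; otherwise it only costs memory.
loader = DataLoader(dataset, batch_size=4, pin_memory=device.type == "cuda")

batches_on_device = []
for x, y in loader:
    # non_blocking=True lets a copy from pinned memory overlap with other host work.
    x = x.to(device, non_blocking=True)
    y = y.to(device, non_blocking=True)
    batches_on_device.append(x.device.type == device.type and y.device.type == device.type)

print(batches_on_device)
```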

API

The parameters of DataLoader are as follows:

- dataset: the dataset from which to load the data

- batch_size: the size of each batch

- shuffle: whether to reshuffle the data at every epoch

- sampler: defines the strategy to draw samples from the dataset.

- batch_sampler: like the sampler, but returns a batch of indices at a time.

- num_workers: how many subprocesses to use for data loading.

- collate_fn: merges a list of samples to form a mini-batch of Tensor(s).

- pin_memory: whether to copy tensors into CUDA pinned memory.

- drop_last: whether to drop the last incomplete batch.
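Several of these parameters come together when samples have different lengths, which the default `collate_fn` cannot stack. This sketch passes a hypothetical `pad_collate` function that pads with `torch.nn.utils.rnn.pad_sequence` and also returns the original lengths; padding with 0 is an assumption that suits most index-valued sequences.

```python
import torch
from torch.nn.utils.rnn import pad_sequence
from torch.utils.data import DataLoader


def pad_collate(samples):
    """Hypothetical collate_fn: pad variable-length 1-D tensors into one (batch, max_len) tensor."""
    lengths = torch.tensor([len(s) for s in samples])
    padded = pad_sequence(samples, batch_first=True, padding_value=0)
    return padded, lengths


# A plain list works as a map-style dataset: it has __getitem__ and __len__.
sequences = [torch.tensor([1, 2, 3]), torch.tensor([4]), torch.tensor([5, 6])]

# The default collate_fn would raise an error here because the tensors differ in length.
loader = DataLoader(sequences, batch_size=3, shuffle=False, collate_fn=pad_collate)
padded, lengths = next(iter(loader))
print(padded, lengths)
```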

Other useful APIs

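- concat: Concatenates a list of tensors along a given dimension.

- Subset: Subset of a dataset at specified indices.

Tips

`Subset` is a subclass of `Dataset`. Its implementation in `torch.utils.data` is essentially:

```python
from typing import Sequence, TypeVar

from torch.utils.data import Dataset

T_co = TypeVar("T_co", covariant=True)


class Subset(Dataset[T_co]):
    def __init__(self, dataset: Dataset[T_co], indices: Sequence[int]) -> None:
        self.dataset = dataset  # the wrapped dataset
        self.indices = indices  # positions in the wrapped dataset to expose

    def __getitem__(self, idx):
        # A list of subset positions becomes one list lookup on the wrapped dataset.
        if isinstance(idx, list):
            return self.dataset[[self.indices[i] for i in idx]]
        return self.dataset[self.indices[idx]]

    def __len__(self):
        return len(self.indices)
```

- random_split: Randomly split a dataset into non-overlapping new datasets of given lengths.

- Sampler: Base class for all Samplers. `RandomSampler`, `SequentialSampler`, `SubsetRandomSampler`, `WeightedRandomSampler` and `BatchSampler` are subclasses of `Sampler`.

The difference between `Sampler` and `BatchSampler`

When automatic batching (auto-collation) is enabled, `DataLoader` draws indices from `batch_sampler`, which yields a list of indices per batch; otherwise it draws single indices from `sampler`. The choice is made by the `_index_sampler` property of `DataLoader`:

```python
@property
def _index_sampler(self):
    # Auto-collation is on whenever batch_sampler is not None (e.g. batch_size was set).
    if self._auto_collation:
        return self.batch_sampler
    else:
        return self.sampler
```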
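The distinction can be checked directly: a `Sampler` yields one index at a time, while a `BatchSampler` wraps a sampler and yields lists of indices. This sketch also inspects which sampler objects a `DataLoader` builds for itself; the 7-sample dataset is an illustrative assumption.

```python
import torch
from torch.utils.data import BatchSampler, DataLoader, SequentialSampler, TensorDataset

dataset = TensorDataset(torch.arange(7))

# A Sampler yields single indices.
sampler = SequentialSampler(dataset)
single_indices = list(sampler)

# A BatchSampler wraps a Sampler and groups its indices into lists of batch_size.
index_batches = list(BatchSampler(sampler, batch_size=3, drop_last=False))

# With batch_size set, DataLoader builds a BatchSampler around its sampler (auto-collation on).
auto = DataLoader(dataset, batch_size=3)

# With batch_size=None, batch_sampler stays None, so indices come from sampler one at a time.
manual = DataLoader(dataset, batch_size=None)

print(single_indices, index_batches, type(auto.batch_sampler).__name__, manual.batch_sampler)
```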